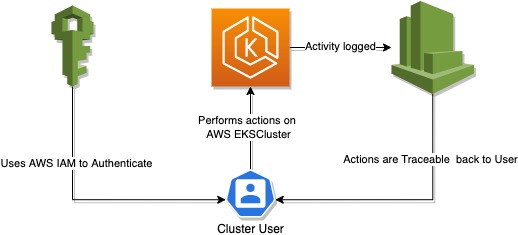

IAM User Traceability in AWS EKS

19 Dec 2021

Today I would like to show how to trace IAM user actions in AWS EKS clusters, what needs to be configured, and what limitations exist. It is good practice to keep an audit log of administrative activity so that suspicious actions can be detected. An audit log helps to investigate issues and write postmortems, and you will most likely need one for certifications such as ISO 27001.

This post does not cover specific strategies for tracing and monitoring user activity. Instead, it addresses the setup required to log user actions in a traceable manner.

Default configuration

By default, AWS EKS maps IAM users and roles to Kubernetes users through records in the “aws-auth” ConfigMap. This is not very convenient to automate out of the box, because all mappings have to be written as YAML text into that predefined ConfigMap.
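To illustrate what “mappings as text” means: the value of mapUsers is a YAML list stored as one plain string inside the ConfigMap, so any automation ends up generating that string. A minimal, dependency-free sketch of such a generator; UserMapping and render_map_users are hypothetical names, not part of any AWS tool:

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class UserMapping:
    """One entry of the aws-auth mapUsers list (hypothetical helper type)."""

    userarn: str
    username: str
    groups: List[str] = field(default_factory=list)


def render_map_users(entries: List[UserMapping]) -> str:
    """Render entries as the YAML text stored under data.mapUsers.

    Plain string formatting keeps the sketch dependency-free; a real tool
    should use a YAML library so that special characters are quoted.
    """
    lines: List[str] = []
    for e in entries:
        lines.append(f"- userarn: {e.userarn}")
        lines.append(f"  username: {e.username}")
        if e.groups:
            lines.append("  groups:")
            lines.extend(f"    - {g}" for g in e.groups)
    return ("\n".join(lines) + "\n") if lines else ""


if __name__ == "__main__":
    print(render_map_users([
        UserMapping("arn:aws:iam::XXXXXXXXXXXX:user/gennady.potapov", "gennady.potapov"),
    ]), end="")
```

The resulting string is what has to be written under data.mapUsers of the ConfigMap, for example with kubectl.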

It is common to map IAM roles to a cluster user, because this is convenient. The short demo below shows the default capabilities for tracing user actions in AWS EKS.

This post assumes that we have a configured AWS EKS Cluster available.

Reference configuration

Here is a reference configuration of AWS IAM and EKS for the tests.

Test IAM role ARN: arn:aws:iam::XXXXXXXXXXXX:role/eks-cluster-admin

AWS Auth ConfigMap:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: arn:aws:iam::XXXXXXXXXXXX:role/eks-cluster-admin
      username: eks-cluster-admin
  mapUsers: |
    - userarn: arn:aws:iam::XXXXXXXXXXXX:user/gennady.potapov
      username: gennady.potapov
```

ClusterRole:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: eks-cluster-admin
rules:
  - apiGroups:
      - '*'
    resources:
      - '*'
    verbs:
      - '*'
  - nonResourceURLs:
      - '*'
    verbs:
      - '*'
```

ClusterRoleBinding:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: eks-cluster-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: eks-cluster-admin
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: eks-cluster-admin
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: gennady.potapov
```

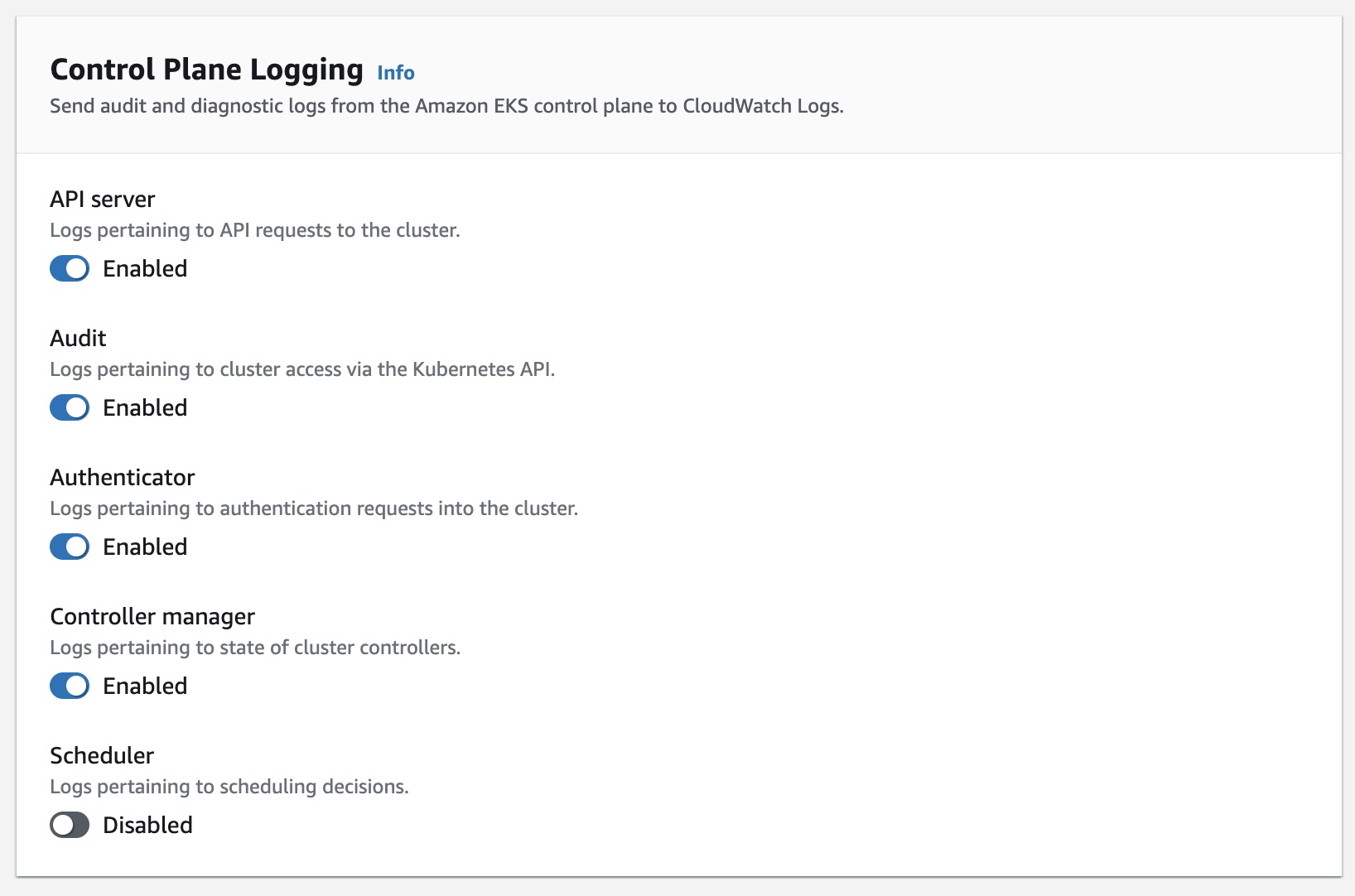

Enable cluster logging for the audit and authenticator log types. You can do it with the AWS CLI:

```shell
aws eks update-cluster-config --name <cluster_name> --logging '{"clusterLogging":[{"types":["audit","authenticator"],"enabled":true}]}'
```

Or enable the same log types in the AWS Console, in the cluster's logging settings.
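If you enable logging from a script, it is safer to build the --logging value with a JSON serializer than to hand-write it. A minimal sketch; the helper names and the cluster name my-cluster are placeholders:

```python
import json
import shlex
from typing import List


def logging_payload(log_types: List[str], enabled: bool = True) -> str:
    """Build the JSON value for `aws eks update-cluster-config --logging`."""
    return json.dumps(
        {"clusterLogging": [{"types": log_types, "enabled": enabled}]},
        separators=(",", ":"),  # compact form, identical to the command above
    )


def update_logging_argv(cluster_name: str, log_types: List[str]) -> List[str]:
    """Return the CLI call as an argv list, e.g. for subprocess.run()."""
    return [
        "aws", "eks", "update-cluster-config",
        "--name", cluster_name,
        "--logging", logging_payload(log_types),
    ]


if __name__ == "__main__":
    # Print a copy-pasteable, shell-quoted command line.
    print(shlex.join(update_logging_argv("my-cluster", ["audit", "authenticator"])))
```

Passing the argv list to subprocess.run() avoids shell quoting issues entirely; shlex.join() is only used here to show the equivalent command line.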

This configuration lets me authenticate to the EKS API in one of two ways:

- using the arn:aws:iam::XXXXXXXXXXXX:user/gennady.potapov IAM user
- using the arn:aws:iam::XXXXXXXXXXXX:role/eks-cluster-admin IAM role

Kubeconfig:

```yaml
apiVersion: v1
kind: Config
clusters:
  - cluster:
      certificate-authority-data: XXX...
      server: https://XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX.grX.us-east-1.eks.amazonaws.com
    name: authcluster
contexts:
  - context:
      cluster: authcluster
      user: userauth
    name: userauth
  - context:
      cluster: authcluster
      user: roleauth
    name: roleauth
current-context: userauth
users:
  - name: userauth
    user:
      exec:
        apiVersion: client.authentication.k8s.io/v1alpha1
        args:
          - --region
          - us-east-1
          - eks
          - get-token
          - --cluster-name
          - XXXXXXX
        command: aws
        env:
          - name: AWS_PROFILE
            value: XXX
  - name: roleauth
    user:
      exec:
        apiVersion: client.authentication.k8s.io/v1alpha1
        args:
          - --region
          - us-east-1
          - eks
          - get-token
          - --cluster-name
          - XXXXXXX
          - --role
          - arn:aws:iam::XXXXXXXXXXXX:role/eks-cluster-admin
        command: aws
        env:
          - name: AWS_PROFILE
            value: XXX
```
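Each users entry in the kubeconfig tells kubectl to run aws eks get-token, and the two entries differ only in the trailing --role argument. A small sketch of the command each entry amounts to; get_token_exec is a hypothetical helper and the values in the usage lines are placeholders:

```python
from typing import Dict, List, Optional, Tuple


def get_token_exec(
    region: str,
    cluster_name: str,
    profile: str,
    role_arn: Optional[str] = None,
) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, extra_env) equivalent to one kubeconfig exec entry."""
    argv = ["aws", "--region", region, "eks", "get-token", "--cluster-name", cluster_name]
    if role_arn is not None:
        # Only the roleauth user adds this: the token is issued for the assumed role.
        argv += ["--role", role_arn]
    return argv, {"AWS_PROFILE": profile}


if __name__ == "__main__":
    print(get_token_exec("us-east-1", "demo", "default"))
    print(get_token_exec("us-east-1", "demo", "default",
                         "arn:aws:iam::XXXXXXXXXXXX:role/eks-cluster-admin"))
```

Because the roleauth token is issued for the role rather than for the IAM user, EKS only sees the role, which is what the next section demonstrates.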

Tracing EKS user actions with default configuration

Now we will test the configuration to trace user actions, starting with the “userauth” context.

Tracing mapped users

Switch the kubeconfig context to userauth and perform some actions on the cluster:

```shell
kubectl config use-context userauth
kubectl get ns
# ...
kubectl get po -n kube-system
```

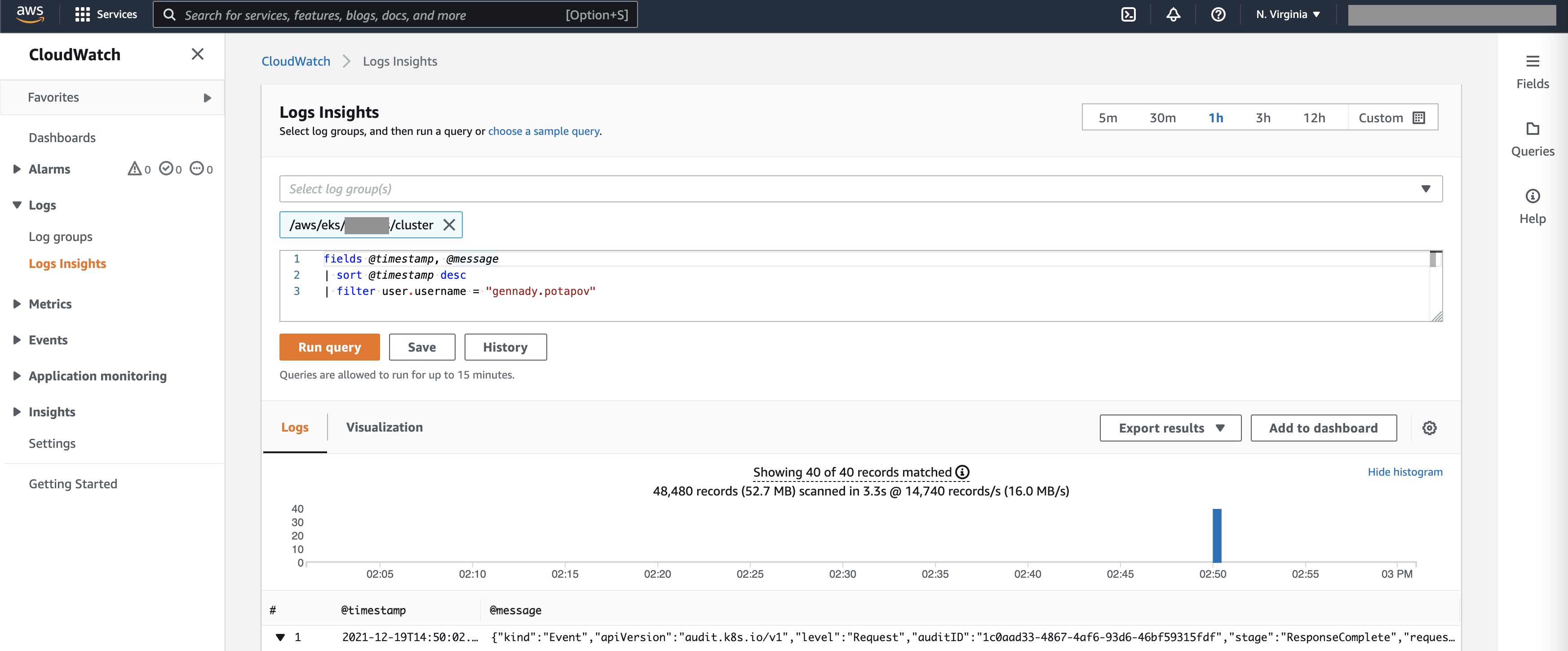

After this, go to AWS CloudWatch Logs Insights, select the cluster's log group (/aws/eks/<cluster_name>/cluster), and run this query:

```
fields @timestamp, @message
| sort @timestamp desc
| filter user.username = "gennady.potapov"
```
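When the same query is needed for many users, it can be generated instead of edited by hand. A minimal sketch; user_activity_query is a hypothetical helper, and rejecting double quotes is a simplification to avoid dealing with string escaping in the query language:

```python
def user_activity_query(username: str) -> str:
    """Build the Logs Insights query above for a given Kubernetes username."""
    if '"' in username:
        # Keep the sketch simple: refuse input that would break the string literal.
        raise ValueError("username must not contain double quotes")
    return "\n".join([
        "fields @timestamp, @message",
        "| sort @timestamp desc",
        f'| filter user.username = "{username}"',
    ])


if __name__ == "__main__":
    print(user_activity_query("gennady.potapov"))
```

The generated string can be pasted into the console or passed as queryString to the CloudWatch Logs StartQuery API.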

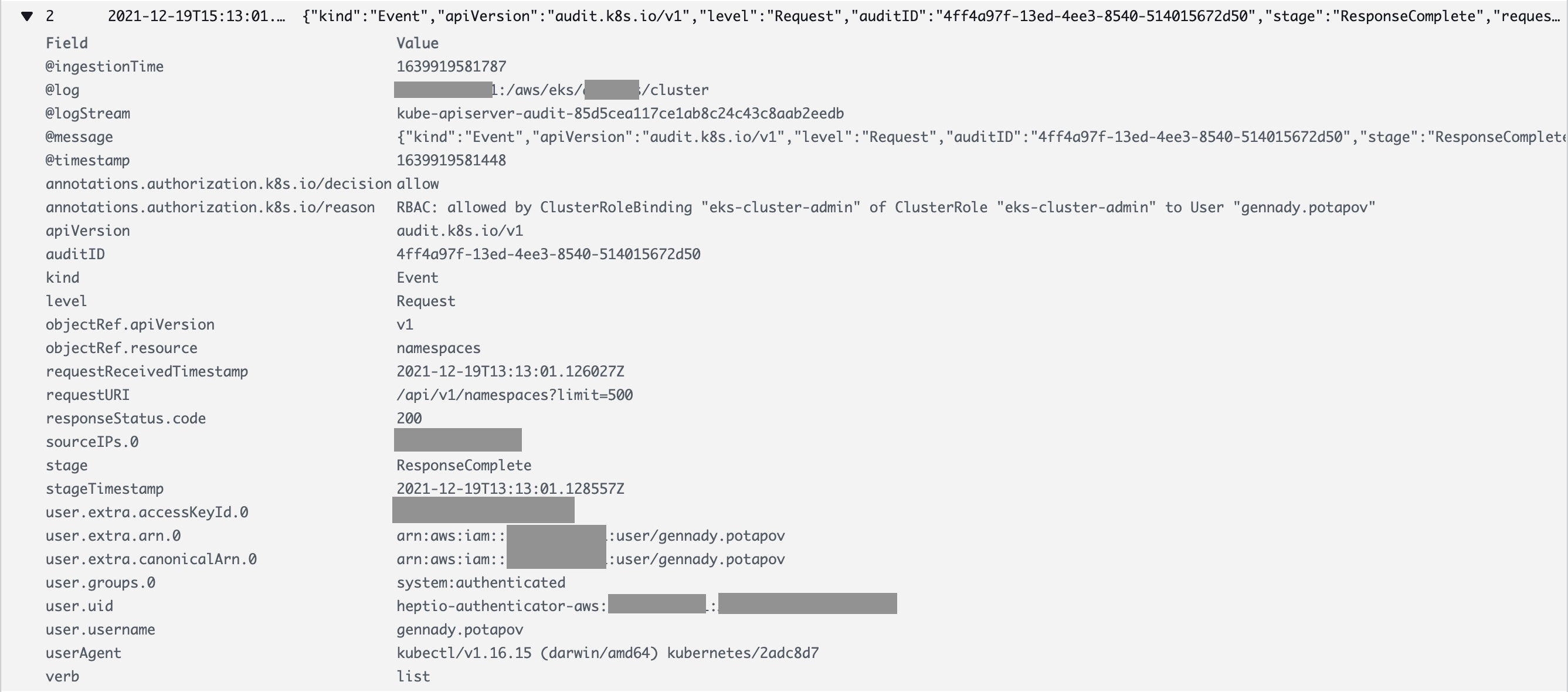

Here is one example log. Some notable fields are:

- requestURI
- user.extra.username.0
- user.username
- verb

As we can see, the request is traceable: all of the user's activity can be found by inspecting the logs.

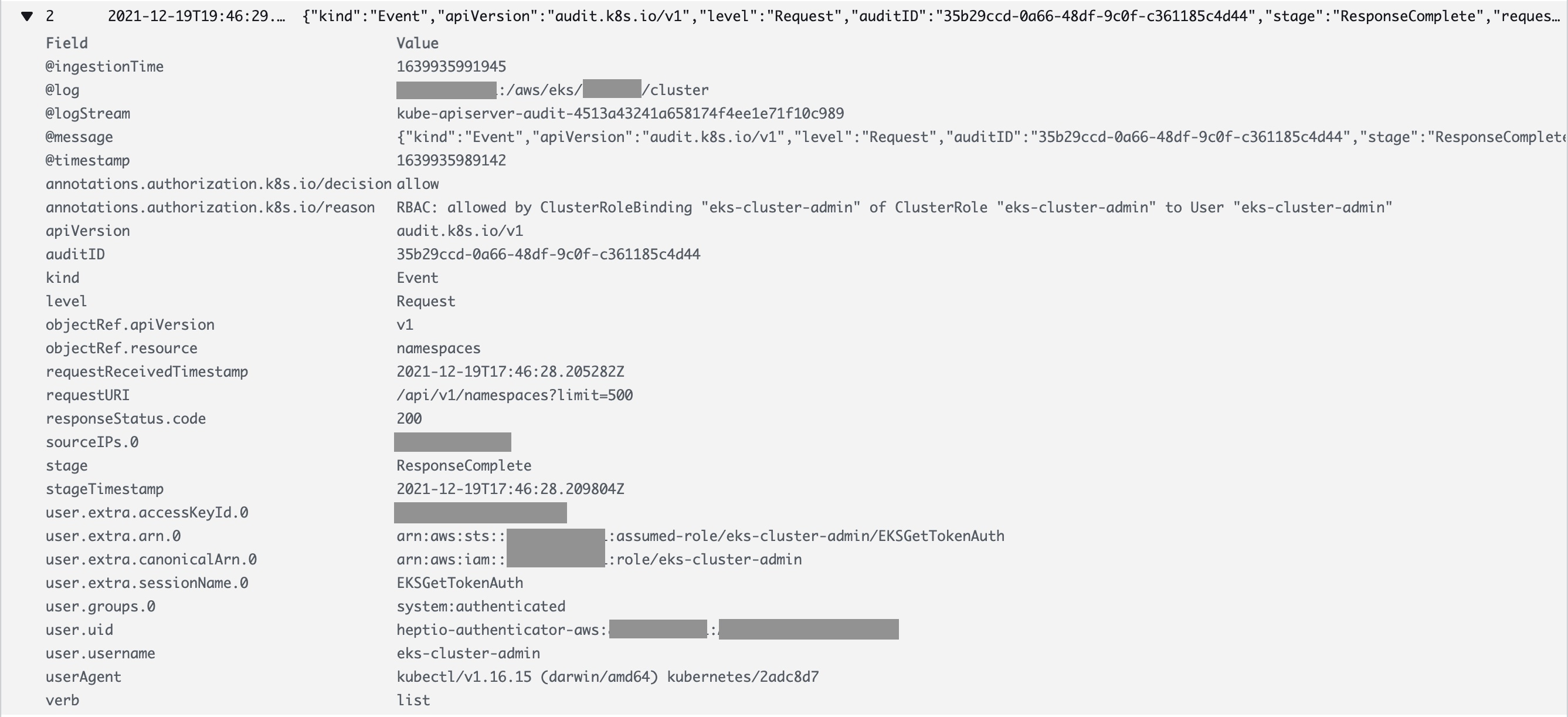
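Logs Insights exposes the nested JSON of an audit event as dotted field paths, with list elements addressed by their index, which is where a name like user.extra.username.0 comes from. A sketch of that flattening and of picking the notable fields; the sample event is hypothetical and heavily trimmed, with its layout assumed from the field names listed above:

```python
import json
from typing import Any, Dict

NOTABLE_FIELDS = ("requestURI", "user.extra.username.0", "user.username", "verb")


def flatten(obj: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts/lists into dotted keys (lists use their index)."""
    if isinstance(obj, dict):
        items = list(obj.items())
    elif isinstance(obj, list):
        items = list(enumerate(obj))
    else:
        return {prefix: obj}
    out: Dict[str, Any] = {}
    for key, value in items:
        path = f"{prefix}.{key}" if prefix else str(key)
        out.update(flatten(value, path))
    return out


def notable_fields(raw_event: str) -> Dict[str, Any]:
    """Pick the notable fields from one raw audit log message."""
    flat = flatten(json.loads(raw_event))
    return {name: flat.get(name) for name in NOTABLE_FIELDS}


# Hypothetical, trimmed audit event; real events carry many more fields.
SAMPLE_EVENT = json.dumps({
    "kind": "Event",
    "apiVersion": "audit.k8s.io/v1",
    "verb": "list",
    "requestURI": "/api/v1/namespaces?limit=500",
    "user": {
        "username": "gennady.potapov",
        "extra": {"username": ["gennady.potapov"]},
    },
})

if __name__ == "__main__":
    print(notable_fields(SAMPLE_EVENT))
```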

Tracing mapped roles

Now let's see what requests made through a mapped role look like.

Switch the kubeconfig context to roleauth and perform some actions on the cluster:

```shell
kubectl config use-context roleauth
kubectl get ns
# ...
kubectl get po -n kube-system
```

After this, go back to AWS CloudWatch Logs Insights and run the same query one more time:

```
fields @timestamp, @message
| sort @timestamp desc
| filter user.username = "gennady.potapov"
```

This only shows our previous API requests. The new ones do not appear, because this time the user authenticated through the role mapping. Run this query to see the new API requests:

```
fields @timestamp, @message
| sort @timestamp desc
| filter user.username = "eks-cluster-admin"
```

This query also lists the API calls, but the result is only partially useful. The username is the generic “eks-cluster-admin” from the role mapping we configured earlier, so every principal that assumes the role shows up under the same name. There is another field, “user.uid”, but it does not help to identify the user either, because it does not reflect an AWS user ID.
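The difference between the two mapping types can be summarized in a small sketch. It assumes the mapUsers and mapRoles sections have already been parsed into hypothetical username-to-ARN dictionaries; the function name attribute() is illustrative only:

```python
from typing import Dict


def attribute(username: str, map_users: Dict[str, str], map_roles: Dict[str, str]) -> str:
    """Describe who an audit-log username can be traced back to.

    map_users / map_roles: Kubernetes username -> IAM ARN, as configured
    in the mapUsers / mapRoles sections of aws-auth.
    """
    if username in map_users:
        return f"single IAM user: {map_users[username]}"
    if username in map_roles:
        # A role can be assumed by many principals, so the name is shared.
        return f"any principal allowed to assume {map_roles[username]}"
    return "not mapped in aws-auth"


if __name__ == "__main__":
    users = {"gennady.potapov": "arn:aws:iam::XXXXXXXXXXXX:user/gennady.potapov"}
    roles = {"eks-cluster-admin": "arn:aws:iam::XXXXXXXXXXXX:role/eks-cluster-admin"}
    for name in ("gennady.potapov", "eks-cluster-admin"):
        print(name, "->", attribute(name, users, roles))
```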

Summary

It is possible to trace the actions of IAM-authenticated users in AWS EKS, but the following conditions must be met:

- Audit log must be enabled on the EKS Cluster

- Users must be mapped with “mapUsers” in “aws-auth” ConfigMap

While the first condition is easy to meet, the second can get tricky with many clusters and users.
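Both conditions can be checked from a script. A minimal sketch, assuming you have saved the JSON output of aws eks describe-cluster --name <cluster_name> and have already collected the IAM user ARNs and the ARNs listed in mapUsers; the helper names are hypothetical:

```python
import json
from typing import Iterable, Set


def enabled_log_types(describe_cluster_output: str) -> Set[str]:
    """Collect enabled control plane log types from `aws eks describe-cluster` JSON."""
    cluster = json.loads(describe_cluster_output)["cluster"]
    enabled: Set[str] = set()
    for entry in cluster.get("logging", {}).get("clusterLogging", []):
        if entry.get("enabled"):
            enabled.update(entry.get("types", []))
    return enabled


def unmapped_users(iam_user_arns: Iterable[str], map_users_arns: Iterable[str]) -> Set[str]:
    """IAM users that have no mapUsers entry of their own."""
    return set(iam_user_arns) - set(map_users_arns)


if __name__ == "__main__":
    with open("describe-cluster.json") as f:  # hypothetical saved CLI output
        types = enabled_log_types(f.read())
    print("audit log enabled:", "audit" in types)
```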

Solutions

Automatic user synchronization

Maintaining user mappings by hand works for a small set of users, but it gets hard as the number of users and clusters grows. I implemented aws-auth-operator as a convenience tool to manage the “aws-auth” ConfigMap.

In order to set up AWS IAM to Kubernetes group synchronization, one needs to provide a configuration like:

```yaml
apiVersion: auth.ops42.org/v1alpha1
kind: AwsAuthSyncConfig
metadata:
  name: default
  namespace: kube-system
spec:
  syncIamGroups:
    - source: dev-operator-k8s-admins
      dest: dev-operator-k8s-admins
    - source: dev-operator-k8s-users
      dest: dev-operator-k8s-users
```

This populates the “mapUsers” parameter of the “aws-auth” ConfigMap with the members of the IAM groups and keeps it in sync periodically. Assuming the user ‘john’ is a member of both the ‘dev-operator-k8s-admins’ and ‘dev-operator-k8s-users’ groups, while the user ‘fred’ is only a member of the ‘dev-operator-k8s-users’ group in IAM, the aws-auth ConfigMap will be modified as follows:

```yaml
...
  mapUsers: |
    - userarn: arn:aws:iam::XXXXXXXXXXXX:user/john
      username: john
      groups:
        - dev-operator-k8s-admins
        - dev-operator-k8s-users
    - userarn: arn:aws:iam::XXXXXXXXXXXX:user/fred
      username: fred
      groups:
        - dev-operator-k8s-users
```

You can find the operator here: https://github.com/gp42/aws-auth-operator
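The group-to-user merge described above can be sketched in a few lines. This is not the operator's actual code: it assumes the IAM group memberships have already been fetched into a dictionary (hypothetical input), and that IAM users live at the root path, so their ARN is arn:aws:iam::<account>:user/<name>:

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def compute_map_users(
    account_id: str,
    group_members: Dict[str, List[str]],
    sync_groups: List[Tuple[str, str]],
) -> List[dict]:
    """Merge IAM group memberships into mapUsers entries.

    group_members: IAM group name -> IAM user names in that group.
    sync_groups: (source IAM group, dest Kubernetes group) pairs,
    mirroring spec.syncIamGroups.
    """
    groups_by_user: Dict[str, List[str]] = defaultdict(list)
    for source, dest in sync_groups:
        for user in group_members.get(source, []):
            if dest not in groups_by_user[user]:
                groups_by_user[user].append(dest)
    return [
        {"userarn": f"arn:aws:iam::{account_id}:user/{user}", "username": user, "groups": groups}
        for user, groups in sorted(groups_by_user.items())
    ]


if __name__ == "__main__":
    members = {
        "dev-operator-k8s-admins": ["john"],
        "dev-operator-k8s-users": ["john", "fred"],
    }
    pairs = [
        ("dev-operator-k8s-admins", "dev-operator-k8s-admins"),
        ("dev-operator-k8s-users", "dev-operator-k8s-users"),
    ]
    for entry in compute_map_users("XXXXXXXXXXXX", members, pairs):
        print(entry)
```

A periodic sync then only needs to re-run this merge and rewrite mapUsers when the result changes.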

OpenID Connect

An alternative solution is to use an OpenID Connect (OIDC) identity provider for cluster authentication. This functionality is available on AWS EKS v1.16 and later. I cover this implementation in the AWS EKS OIDC with Google Workspace post.